The 2024 edition of Mirror, Mirror was constructed using the methodological approach initially developed for the 2017 edition and subsequently used for the 2021 edition.34 In 2024, this approach was once again informed by an expert advisory panel convened to review measures, data, and methods for the report.35

Mirror, Mirror is unique in its heavy reliance on survey measures designed to capture the perspectives of patients and professionals — the people who experience health care in each country. Nearly three-quarters of the report’s measures are derived from patient or physician reports of health system performance.

Data

Survey data are drawn from Commonwealth Fund International Health Policy Surveys fielded during 2021, 2022, and 2023. Since 1998, in close collaboration with international partners, the Commonwealth Fund has supported these surveys of the public’s and primary care physicians’ experiences of their health care systems. Each year, in collaboration with researchers and partners in the 10 countries, a common questionnaire is developed, translated, adapted, and pretested. In 2021 we surveyed adults age 65 and older. The 2022 survey was of primary care physicians and the 2023 survey was of the general population (adults age 18 and older). The 2021 and 2023 surveys examined patients’ views of the health care system, quality of care, care coordination, patient–physician communication, wait times, and access problems. The 2022 survey examined primary care physicians’ experiences providing care to patients, use of information technology, and use of teams to provide care.

The Commonwealth Fund International Health Policy Surveys (2021, 2022, and 2023) include nationally representative samples drawn at random from the populations surveyed. The 2021 and 2023 surveys’ sampling frames were generated using probability-based overlapping landline and mobile phone sampling designs and, in some countries, listed or nationwide population registries. The 2022 survey of primary care physicians was drawn from government or private company lists of practicing primary care doctors in each country, except in France, where the sample was selected from publicly available lists of primary care physicians. Within each country, experts defined the physician specialties responsible for primary care, recognizing that roles, training, and scopes of practice vary across countries. In all countries, general practitioners (GPs) and family physicians were included, with internists and pediatricians also sampled in Switzerland and the United States. Appendix 9 presents the number of respondents and response rates for each survey. Further details of the survey methods are described elsewhere.36

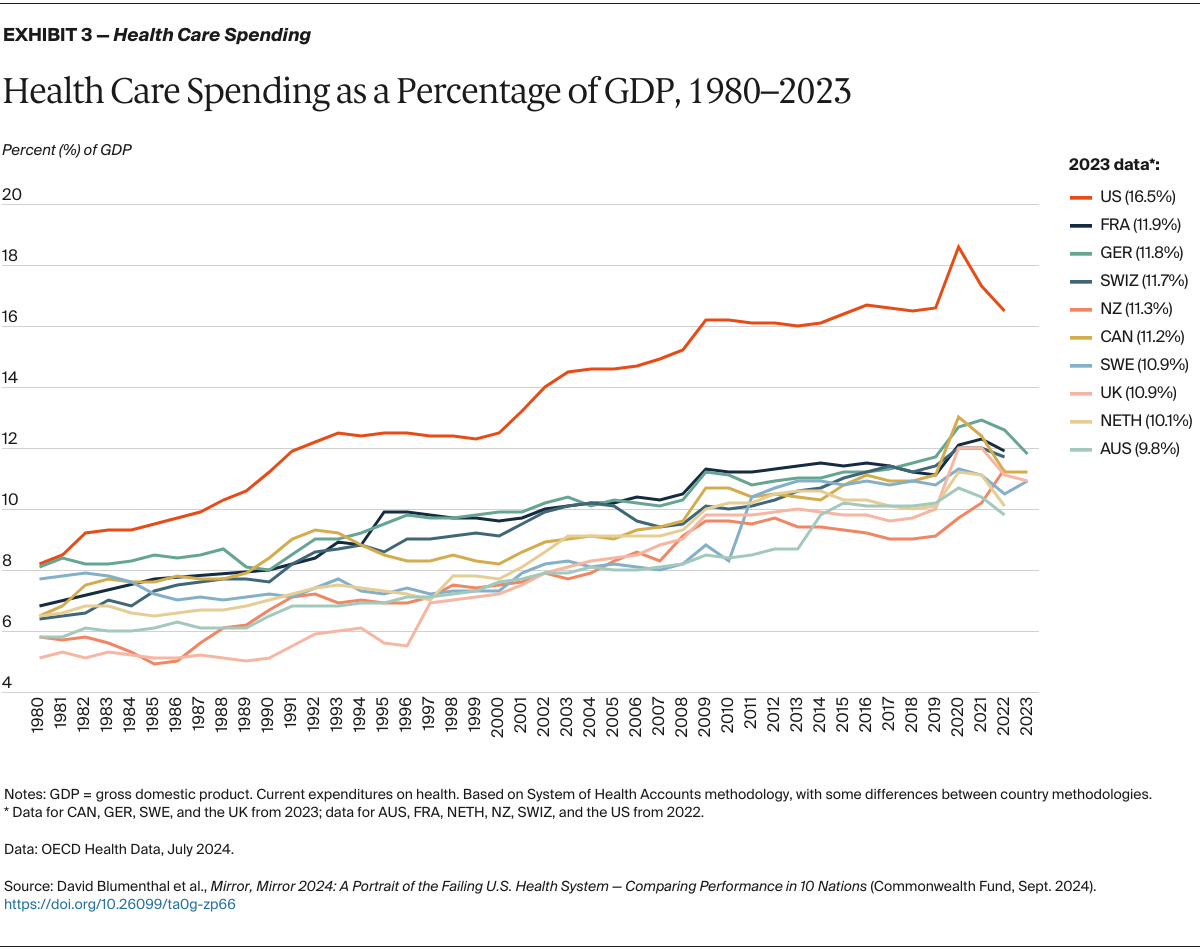

In addition to the survey items, standardized data were drawn from recent reports of the Organisation for Economic Co-operation and Development (OECD), Our World in Data, the World Health Organization (WHO), country-specific mortality data (both publicly available and not publicly available), the peer-reviewed literature, and the U.S. Agency for Healthcare Research and Quality.

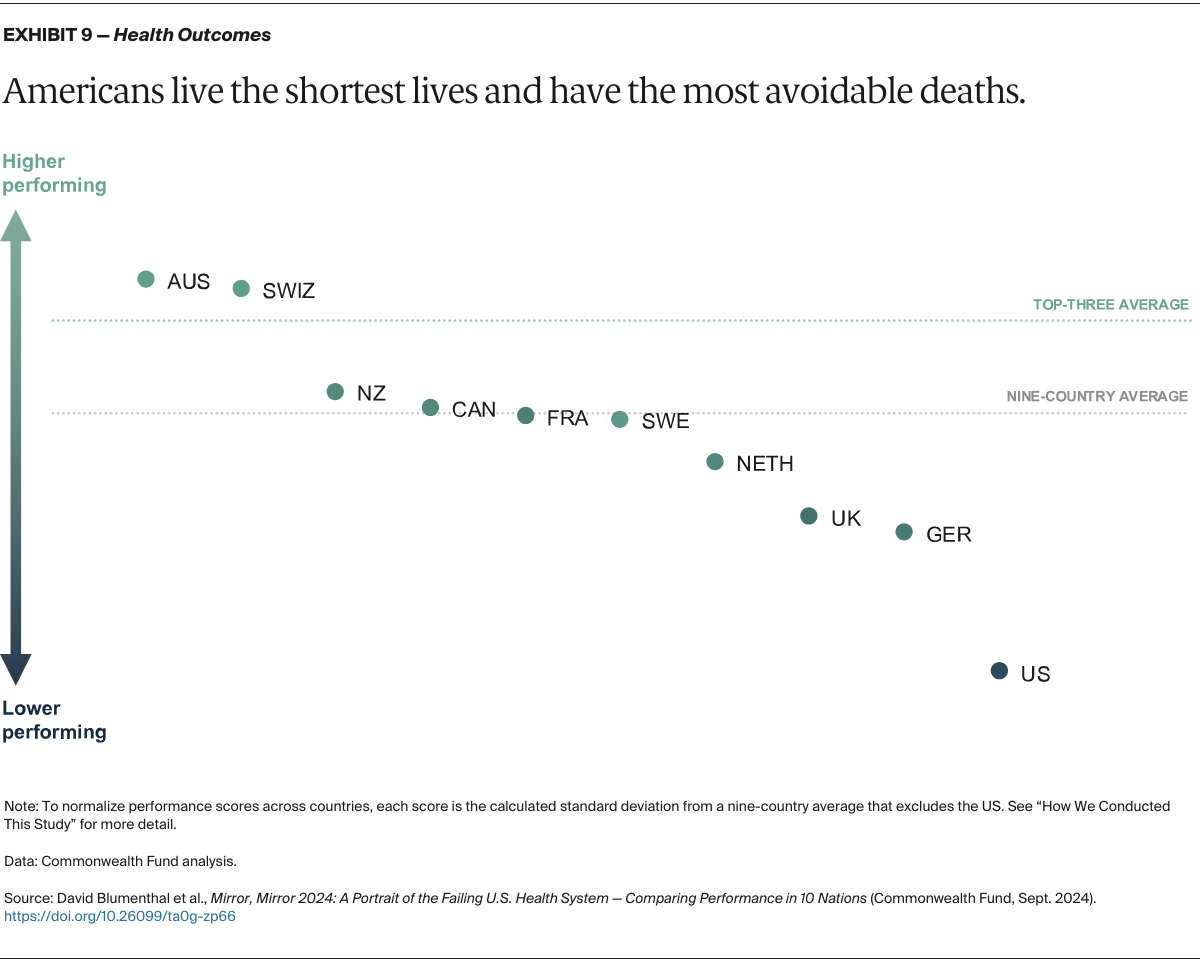

In the health outcomes domain of the 2024 report, treatable and preventable mortality data were calculated by pulling ICD-10 coded mortality data according to the OECD’s definitions of preventable and treatable mortality across all 10 countries. The respective national registries providing data for each country were: the Australian Bureau of Statistics (ABS, Australia); Statistics Canada (Canada); Le Centre d'épidémiologie sur les causes médicales de décès (CépiDc, France); Destatis (Federal Statistical Office Germany, Germany); Centraal Bureau voor de Statistiek (Statistics Netherlands, the Netherlands); Health New Zealand (New Zealand); Socialstyrelsen (Sweden); Federal Office of Public Health (FOPH, Switzerland); and the Centers for Disease Control and Prevention’s WONDER online database (CDC WONDER, U.S.). For the United Kingdom, we combined data from the Northern Ireland Statistics and Research Agency (NISRA, Northern Ireland), the National Records of Scotland (NRS, Scotland), and the Office for National Statistics (ONS, England & Wales). Note that 2021 mortality data from New Zealand are preliminary because there are significant delays in finalizing official cause-of-death results; as a result, they underestimate preventable and treatable mortality.

Changes Since 2021

The majority of measures included in this report are the same as in the 2021 edition of Mirror, Mirror (see Appendix 2). Four 2021 measures were dropped because the corresponding survey question was no longer included in the Commonwealth Fund International Health Policy Survey. Nine measures were considered “modified” in the 2024 report because the wording of a survey item was altered or because measures were combined due to high correlation.

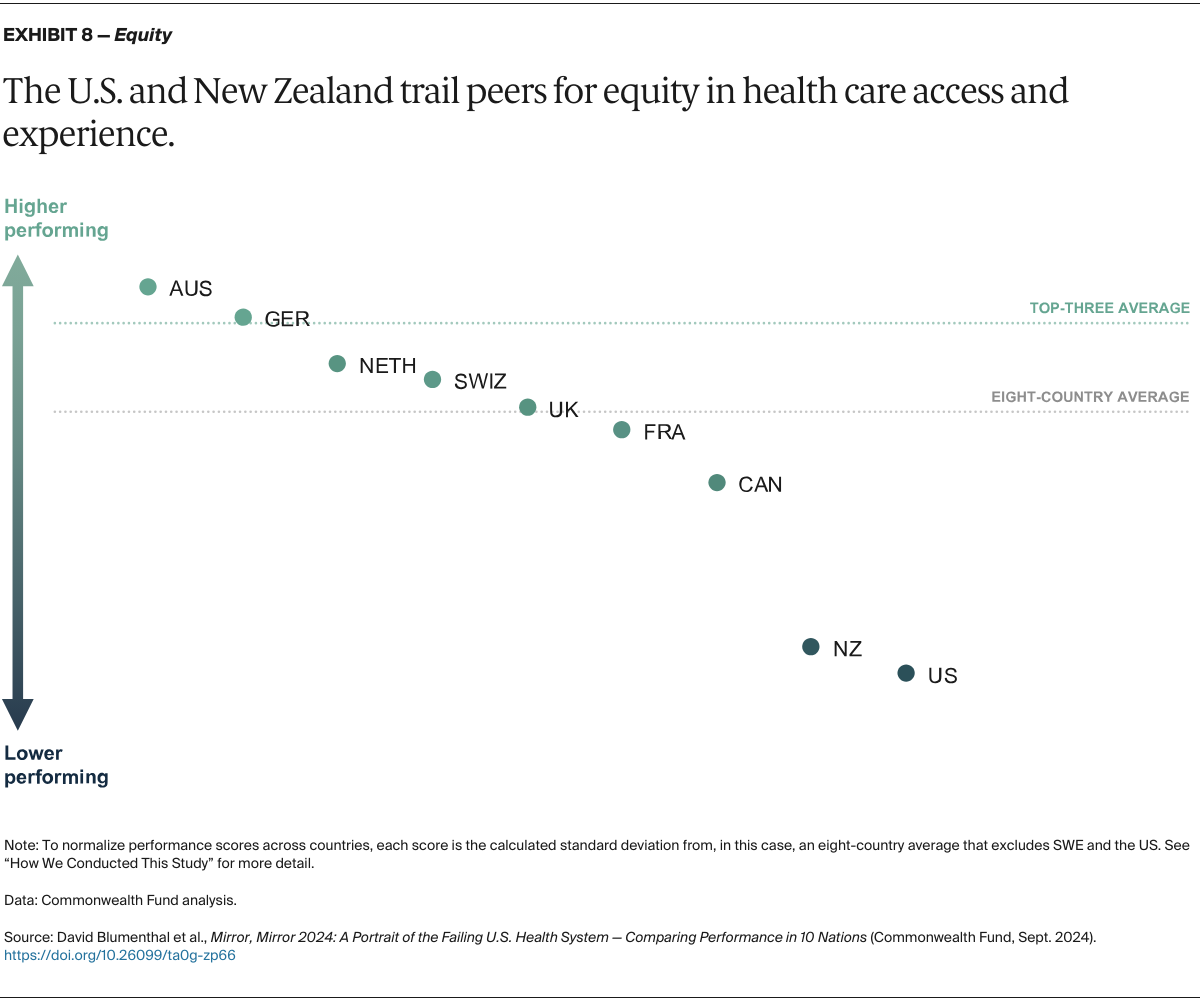

In 2022, Norway exited the International Health Policy Survey. In the more recent suite of surveys, new privacy laws in Sweden prohibited the collection of income data, which prevented the inclusion of Sweden in our income-related equity analyses.

We worked to include new measures to fill previously identified gaps in performance measurement across the 10 countries and to capture newly relevant topics such as telehealth, discrimination experienced by patients, physician burnout, and the effects of the COVID-19 pandemic. Measures related to wait times that were excluded from the 2021 report because they were fielded in the early days of the COVID-19 pandemic were added back to this edition. In selecting new measures, we took into account the availability and timeliness of the data, and how they correlated with other measures in each domain. In the end we included 17 new measures across the five domains (see “How We Measured Performance” for details).

Analysis

The method for calculating performance scores and rankings is similar to that used in the 2021 and 2017 reports, except that we modified the calculation of relative performance because the U.S. was a distinct and substantial outlier (see below).

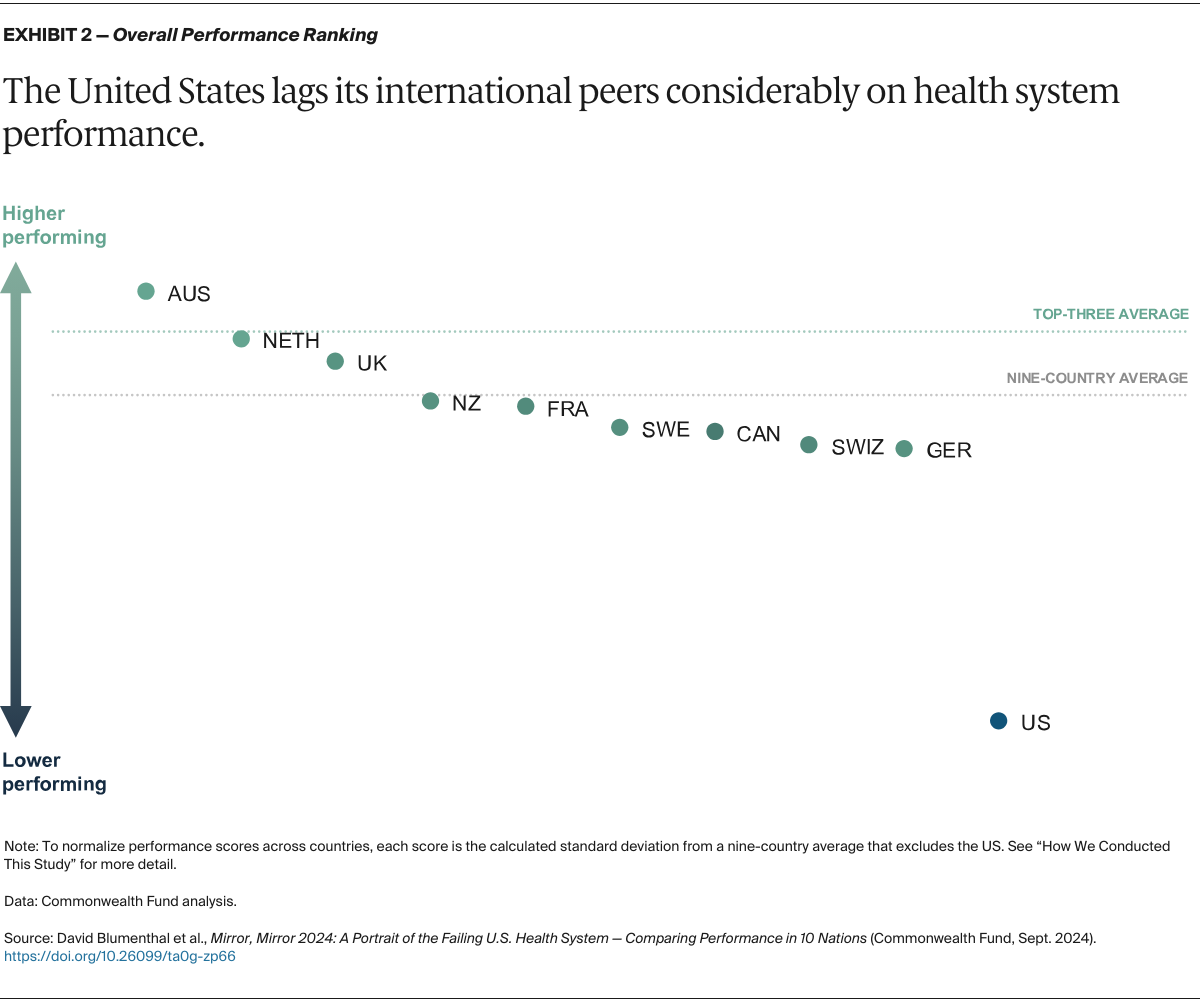

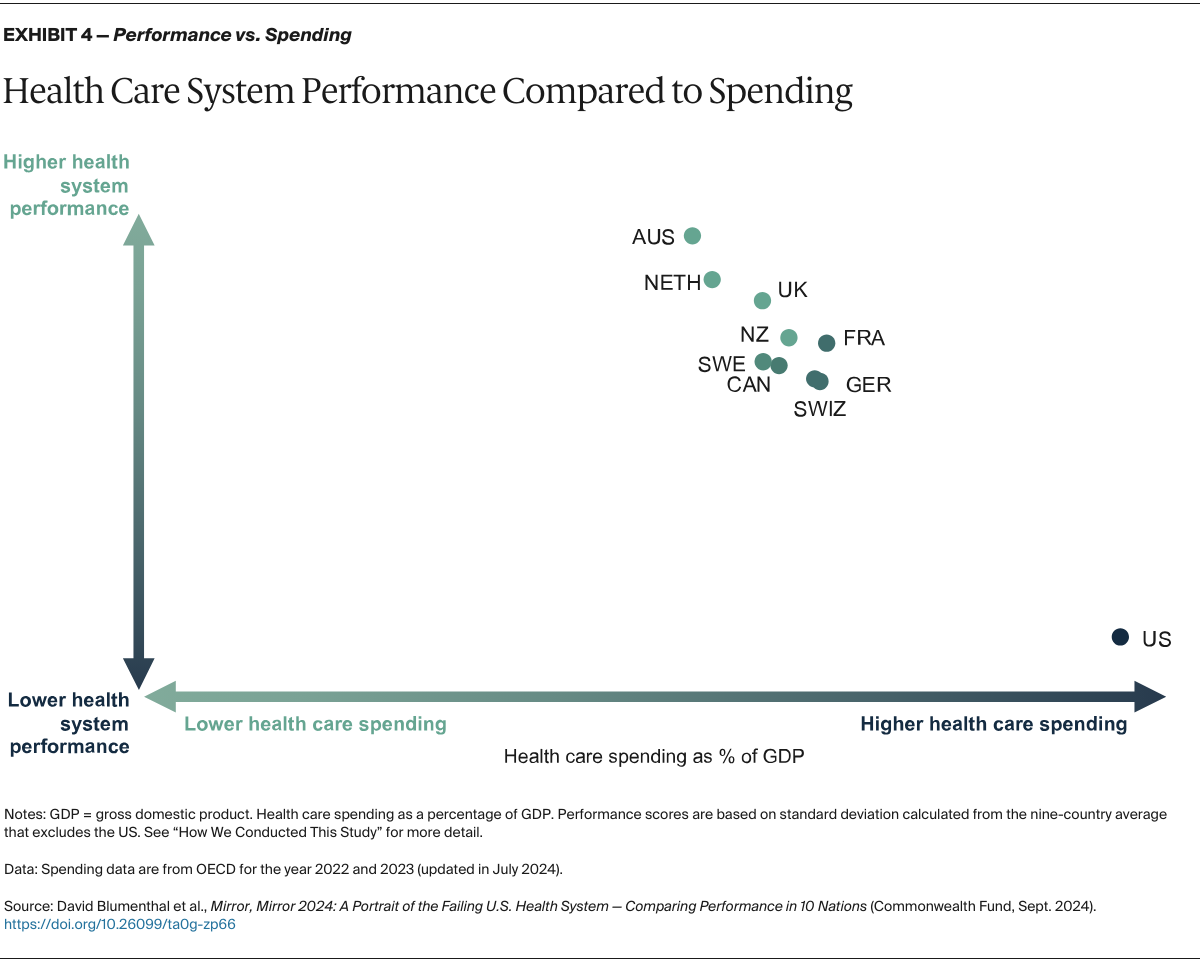

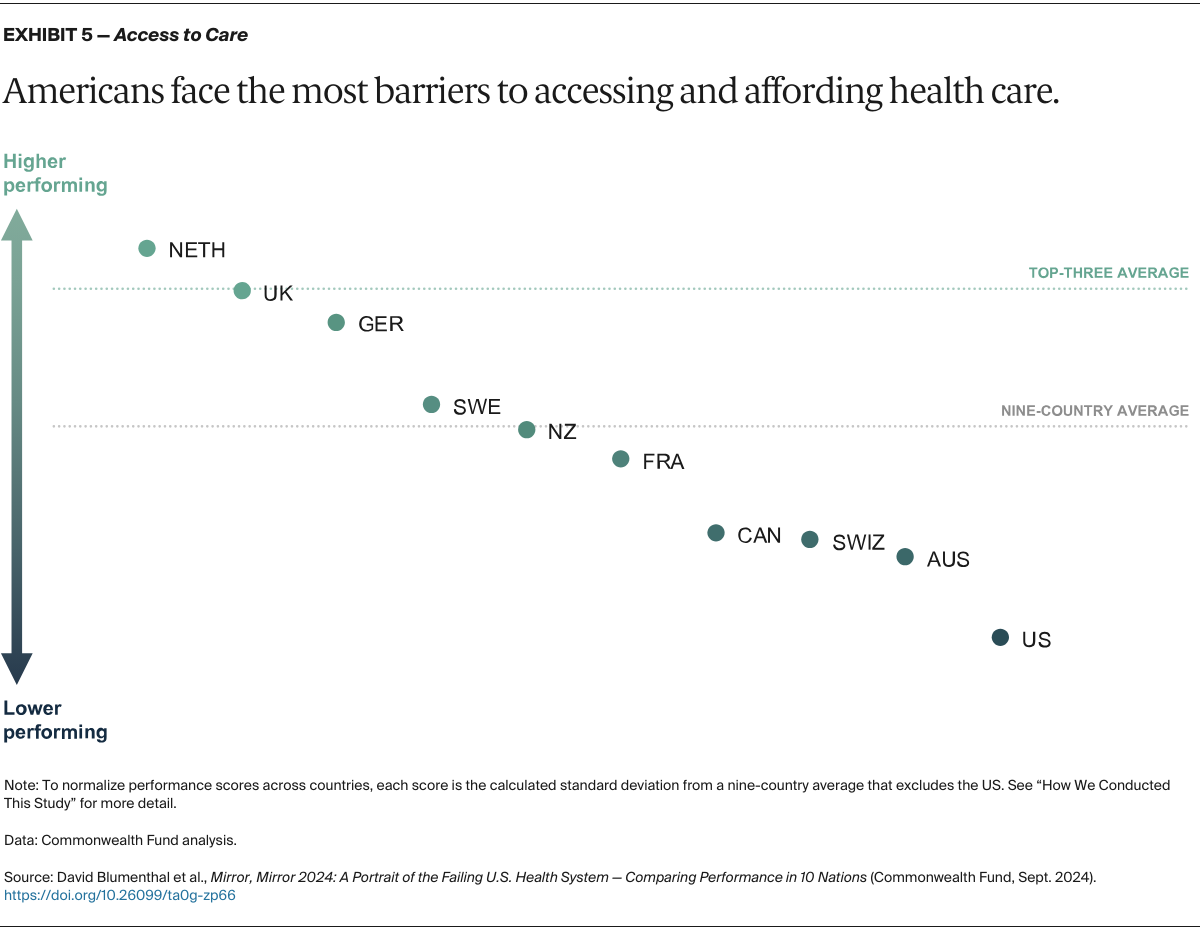

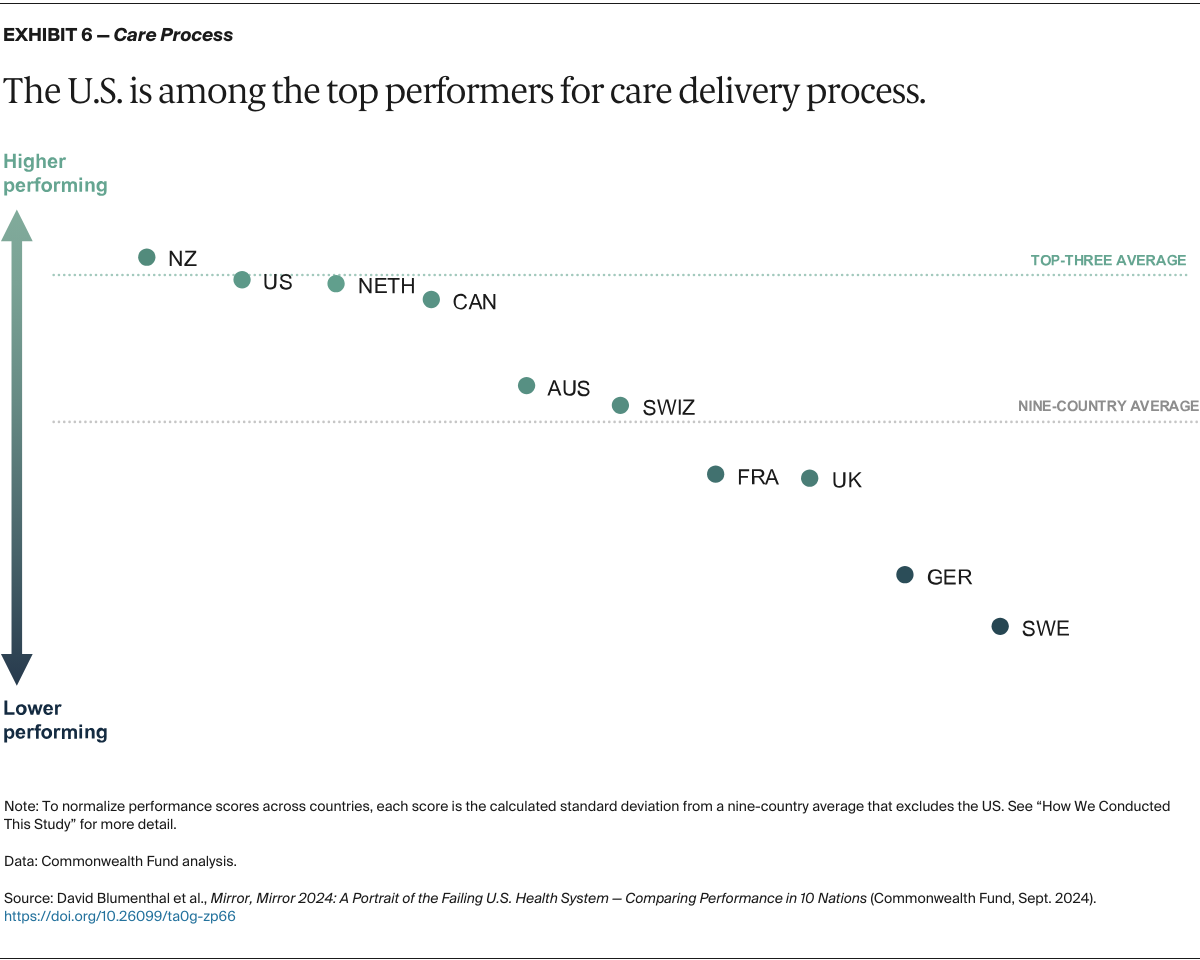

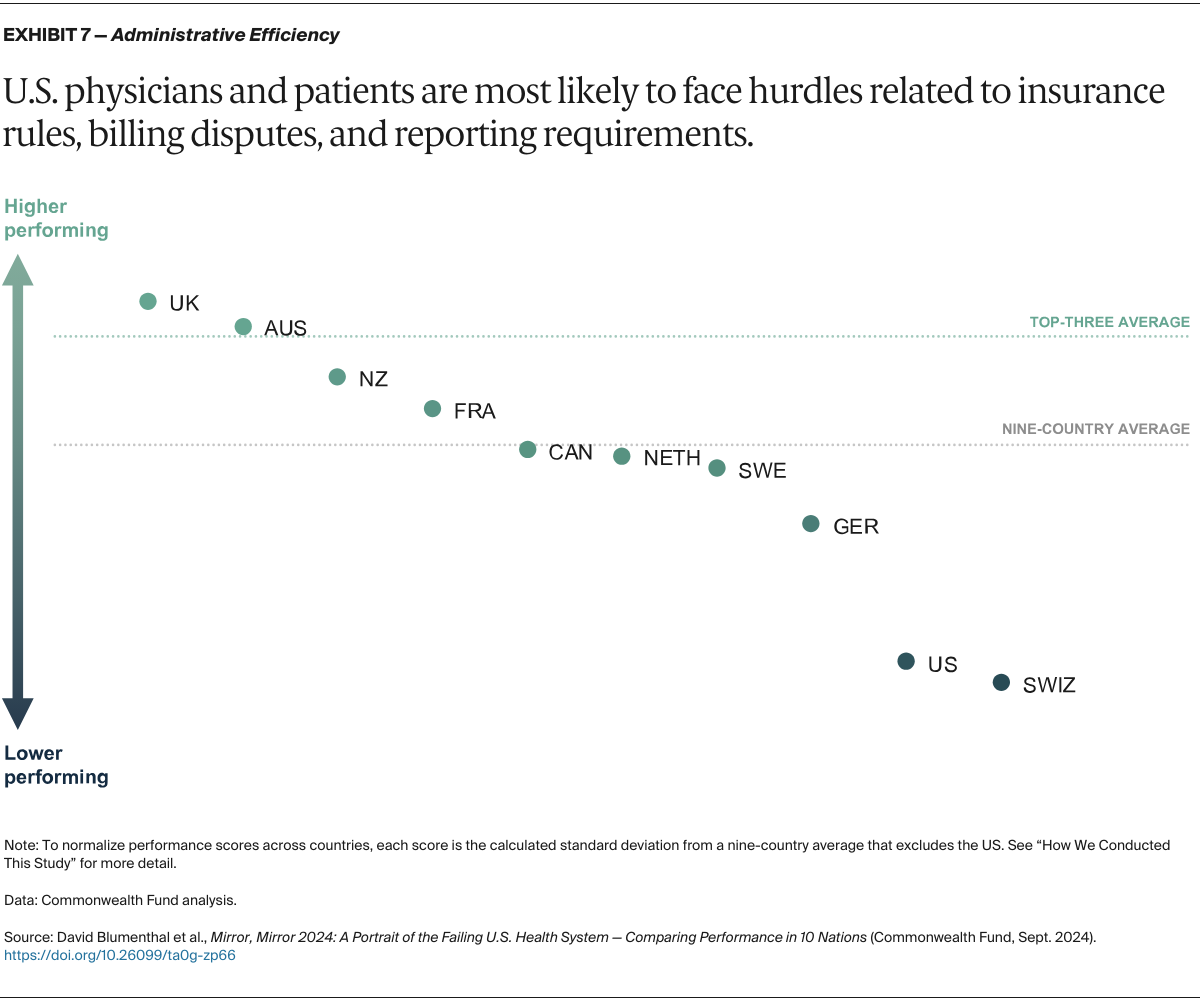

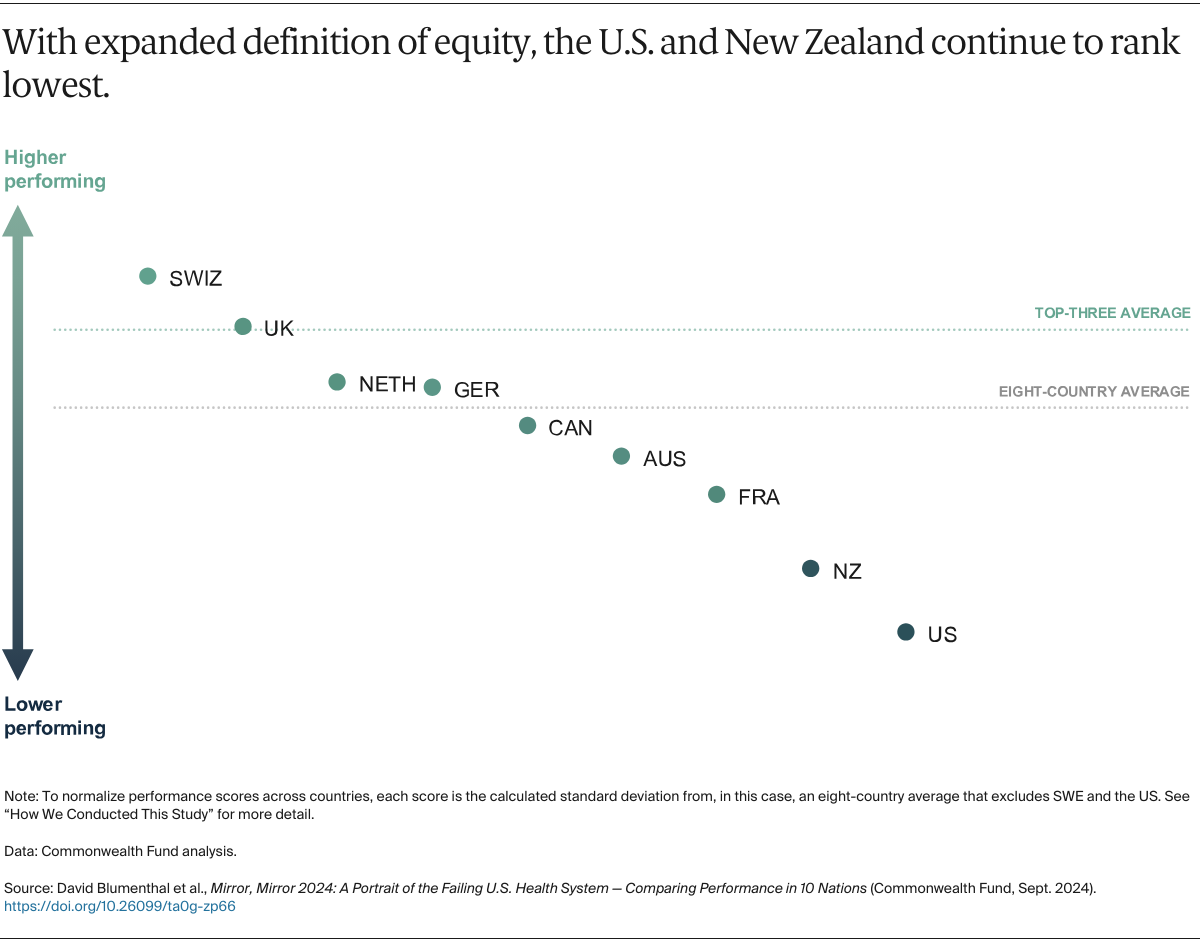

Normalized indicators: For each measure, we converted each country’s result (e.g., the percentage of survey respondents giving a certain response or a mortality rate) to a measure-specific, normalized performance score, which we refer to as normalized indicators. These indicators were calculated as the difference between the country result and the nine-country mean (excluding the U.S.), divided by the standard deviation of the results for each measure (see Appendices 4 through 8). Normalizing the results based on the standard deviation accounts for differences between measures in the range of variation among country-specific results. A positive normalized indicator indicates the country performs above the group average; a negative indicator indicates the country performs below the group average. Performance scores in the equity domain were based on the difference between higher-income and lower-income groups, and in the expanded equity domain were based on the difference between rural and nonrural groups and females and males, with a wider difference interpreted as a measure of lower equity between the groups in each country.
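The normalization described above can be sketched in a few lines of Python. This is an illustrative sketch, not the report's actual code: the function name, the country labels, and the example values are hypothetical, and two details the text does not specify are treated as assumptions (the use of the sample standard deviation, and a sign flip for measures such as mortality rates where a lower result is better).

```python
from statistics import mean, stdev


def normalized_indicators(results, reference_excludes=("US",), higher_is_better=True):
    """Return {country: normalized indicator} for one measure.

    The mean and standard deviation come from the reference group only
    (here, every country except the U.S.), but every country is scored.
    """
    reference = [v for c, v in results.items() if c not in reference_excludes]
    mu = mean(reference)
    sd = stdev(reference)  # assumption: sample SD; the report does not say which
    sign = 1.0 if higher_is_better else -1.0  # assumption: flip "lower is better" measures
    return {c: sign * (v - mu) / sd for c, v in results.items()}


# Hypothetical results for one survey measure (percent of respondents).
example = {
    "AUS": 70.0, "CAN": 62.0, "FRA": 64.0, "GER": 68.0, "NETH": 72.0,
    "NZ": 66.0, "SWE": 60.0, "SWIZ": 65.0, "UK": 67.0, "US": 50.0,
}
scores = normalized_indicators(example)
```

In this made-up example the nine-country mean is 66, so the country with a result of 66 receives a normalized indicator of zero, and the U.S. result of 50 falls more than four standard deviations below the reference mean.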

The normalized scoring approach assumes that results are normally distributed. In 2024, as in 2021, we noted that the U.S. was such a substantial outlier that it was negatively skewing the mean performance, violating the assumption. In past Mirror, Mirror reports, we included all 11 countries in calculating the mean and standard deviation of each measure. In the 2024 and 2021 editions, however, we conducted an outlier analysis (see below), which strongly suggested that it would be more accurate to exclude the U.S. from determining the mean performance and standard deviations of each measure. This modification changes a country’s performance scores relative to the mean but does not affect the ranking of countries relative to one another.
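For a single measure, the effect of changing the reference group can be checked directly: switching the mean and standard deviation only shifts and rescales every country's score by the same amounts, so the order of countries on that measure cannot change. The sketch below illustrates only this single-measure case with hypothetical values and names; it does not reproduce the report's aggregation across measures, where the rescaling differs from measure to measure.

```python
from statistics import mean, stdev


def z_scores(results, reference):
    """Score every country against the mean and SD of the reference countries."""
    ref_values = [results[c] for c in reference]
    mu, sd = mean(ref_values), stdev(ref_values)
    return {c: (v - mu) / sd for c, v in results.items()}


def order(scores):
    """Countries sorted from highest to lowest score."""
    return sorted(scores, key=scores.get, reverse=True)


# Hypothetical results for one measure; "US" plays the role of the outlier.
measure = {"A": 70.0, "B": 62.0, "C": 66.0, "D": 68.0, "US": 40.0}

with_us = z_scores(measure, reference=list(measure))
without_us = z_scores(measure, reference=[c for c in measure if c != "US"])

same_order = order(with_us) == order(without_us)
```

In this example country B scores above average when the outlier is included in the reference group and below average when it is excluded, while the ordering of the five countries is identical in both cases.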

Correlations: In this edition, we conducted a Spearman rank correlation analysis on all the measures included in the final measure list. Measures were combined when they had a correlation coefficient above 0.4, fell within the same domain or subdomain, and were thematically similar. There was one instance of correlated measures being combined in the Access to Care domain, three in the Care Process domain, one in the Administrative Efficiency domain, and one in the Equity domain.
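As a rough illustration of the correlation screen, the sketch below computes a Spearman coefficient as the Pearson correlation of average ranks and compares it with the 0.4 threshold. The helper names and the two example measures are hypothetical. The code covers only the numeric part of the rule; whether two measures share a domain and are thematically similar remains a judgment that is not modeled here.

```python
from statistics import mean


def average_ranks(values):
    """Rank values from 1 to n, giving tied values the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # average of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation of two equal-length sequences."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / (var_x * var_y) ** 0.5


def spearman(x, y):
    """Spearman rank correlation: Pearson correlation of the average ranks."""
    return pearson(average_ranks(x), average_ranks(y))


THRESHOLD = 0.4  # cutoff stated in the text

# Hypothetical results for two measures across the same 10 countries.
measure_a = [55, 61, 48, 70, 66, 52, 59, 63, 45, 68]
measure_b = [50, 64, 47, 72, 60, 55, 58, 61, 49, 69]

rho = spearman(measure_a, measure_b)
candidate_for_combining = rho > THRESHOLD
```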

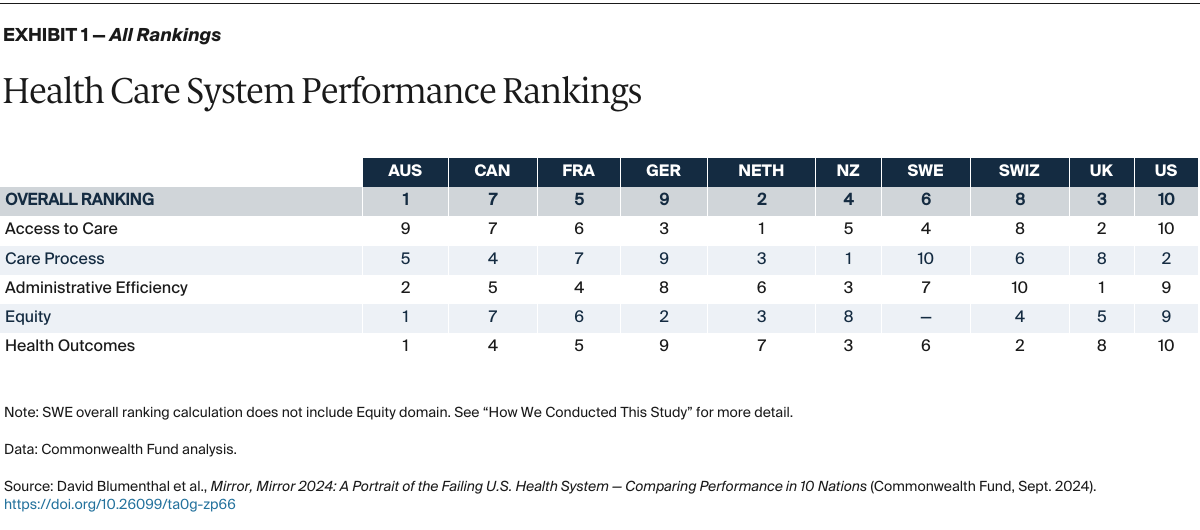

Domain performance scores and ranking: For each country, we calculated the mean of the normalized indicators in each domain. Then we ranked each country from 1 to 10 based on the mean domain performance score, with 1 representing the highest performance score and 10 representing the lowest performance score, with the exception of the “Equity” domain, where the scale was from 1 to 9 due to the removal of Sweden.

Overall performance scores and ranking: For each country, we calculated the mean of the five domain-specific performance scores. Then, we ranked each country from 1 to 10 based on this summary mean score, again with 1 representing the highest overall performance score and 10 representing the lowest overall performance score. Again, the “Equity” domain was ranked on a scale of 1 to 9 due to the removal of Sweden.
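The two aggregation steps can be sketched as follows. The data structure, function names, and values are hypothetical. One detail is an assumption rather than something the text states: when a country has no score in a domain (as with Sweden in the income-related equity analyses), this sketch averages over the domains that are available for that country.

```python
from statistics import mean


def rank_countries(scores):
    """Map each country to its rank, with 1 for the highest score."""
    ordered = sorted(scores, key=scores.get, reverse=True)
    return {country: position for position, country in enumerate(ordered, start=1)}


def domain_scores(indicators):
    """indicators: {domain: {measure: {country: normalized indicator}}}."""
    result = {}
    for domain, measures in indicators.items():
        countries = set().union(*(m.keys() for m in measures.values()))
        result[domain] = {
            c: mean([m[c] for m in measures.values() if c in m]) for c in countries
        }
    return result


def overall_scores(by_domain):
    """Mean of each country's domain scores (assumption: over available domains)."""
    countries = set().union(*(d.keys() for d in by_domain.values()))
    return {c: mean([d[c] for d in by_domain.values() if c in d]) for c in countries}


# Hypothetical normalized indicators for three countries and two domains.
indicators = {
    "access": {
        "m1": {"A": 1.0, "B": 0.0, "C": -1.0},
        "m2": {"A": 0.5, "B": 0.5, "C": -1.0},
    },
    "equity": {
        "m3": {"A": -0.5, "C": 0.5},  # country B has no equity data
    },
}

by_domain = domain_scores(indicators)
overall = overall_scores(by_domain)
overall_rank = rank_countries(overall)
equity_rank = rank_countries(by_domain["equity"])
```

In this toy example the equity ranking runs from 1 to 2 rather than 1 to 3 because one country has no equity score, which mirrors the 1 to 9 equity scale described above.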

Outlier analysis: We applied Tukey’s boxplot method of detecting statistical outliers and identified that the U.S. was an outlier on overall performance. The U.S. was also a statistical outlier in the equity and health outcomes domains. The test identified isolated instances of other countries as statistical outliers on specific measures, but the pattern for other countries was inconsistent and the outlier differences were smaller than for the U.S.
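Tukey's boxplot rule flags any value that lies more than 1.5 interquartile ranges below the first quartile or above the third quartile. A minimal sketch follows, with hypothetical overall scores. The quartile convention (the "inclusive" method in Python's `statistics.quantiles`) is an assumption, since the text does not say which convention was used, and different conventions can move the fences slightly.

```python
from statistics import quantiles


def tukey_outliers(scores, k=1.5):
    """Return the countries whose scores fall outside Tukey's fences."""
    values = list(scores.values())
    q1, _, q3 = quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return {c: v for c, v in scores.items() if v < low or v > high}


# Hypothetical overall performance scores, not taken from the report.
overall = {
    "AUS": 0.25, "CAN": -0.05, "FRA": -0.02, "GER": -0.10, "NETH": 0.30,
    "NZ": 0.05, "SWE": 0.00, "SWIZ": -0.15, "UK": 0.20, "US": -1.20,
}

outliers = tukey_outliers(overall)
```

With these made-up values the fences sit at roughly -0.46 and 0.54, so only the score of -1.20 is flagged.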

Sensitivity analysis: We checked the sensitivity of the results to different methods of excluding the U.S. as an outlier. First, we excluded the U.S. from the calculation of the other countries’ domain and subdomain scores. Second, we removed the U.S. from the performance score calculation of each domain in which it was a statistical outlier on at least one indicator (otherwise keeping the U.S. in the calculation of other domains where it was not an outlier; see Appendix 3). Third, we excluded the U.S. and other countries from the domains in which they were outliers, but the overall performance scores generated under this approach were similar to those generated by excluding the U.S. from every performance score calculation. Based on these results, we excluded the U.S. from the calculation of each country’s overall performance score. The U.S. was included in the calculation of its own overall performance score.

We tested the stability of the ranking method by running two tests based on Monte Carlo simulation to observe how changes in the measure set or changes in the results on some measures would affect the overall rankings. For the first test, we removed three measure results from the analysis at random and then calculated the overall rankings on the remaining 67 measure results, repeating this procedure for 1,000 combinations selected at random. For the second test, we reassigned at random the survey measure results derived from the Commonwealth Fund International Health Policy Surveys across a range of plus or minus 3 percentage points — approximately the 95 percent confidence interval for most measures — recalculating the overall rankings based on the adjusted data and repeating this procedure 1,000 times.
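The two stability tests can be sketched with a small simulation. Everything here is illustrative: the data are synthetic, the function names are hypothetical, and the overall score is simplified to the mean of normalized indicators across all measures rather than the report's two-step domain average. Drawing the perturbation uniformly from plus or minus 3 points is also an assumption, since the text does not name the distribution.

```python
import random
from statistics import mean, stdev


def overall_ranking(results, outlier="US"):
    """results: {measure: {country: value}}, higher is better (simplified)."""
    countries = list(next(iter(results.values())))
    z_by_country = {c: [] for c in countries}
    for values in results.values():
        ref = [v for c, v in values.items() if c != outlier]
        mu, sd = mean(ref), stdev(ref)
        for c, v in values.items():
            z_by_country[c].append((v - mu) / sd)
    scores = {c: mean(z) for c, z in z_by_country.items()}
    return sorted(scores, key=scores.get, reverse=True)  # best first


def drop_measures_test(results, n_drop=3, n_runs=1000, seed=0):
    """Test 1: remove n_drop measures at random, then re-rank."""
    rng = random.Random(seed)
    names = list(results)
    rankings = []
    for _ in range(n_runs):
        kept = rng.sample(names, len(names) - n_drop)
        rankings.append(overall_ranking({m: results[m] for m in kept}))
    return rankings


def perturb_test(results, width=3.0, n_runs=1000, seed=0):
    """Test 2: shift every result by a random amount within +/- width, then re-rank."""
    rng = random.Random(seed)
    rankings = []
    for _ in range(n_runs):
        jittered = {
            m: {c: v + rng.uniform(-width, width) for c, v in vals.items()}
            for m, vals in results.items()
        }
        rankings.append(overall_ranking(jittered))
    return rankings


def share_ranked_last(rankings, country):
    """Fraction of simulated rankings in which the country is last."""
    return sum(r[-1] == country for r in rankings) / len(rankings)


# Synthetic data: 10 measures, 5 countries, one clear low outlier.
_rng = random.Random(1)
base = {"A": 70.0, "B": 66.0, "C": 64.0, "D": 60.0, "US": 40.0}
data = {f"m{i}": {c: v + _rng.uniform(-2, 2) for c, v in base.items()} for i in range(10)}

drop_runs = drop_measures_test(data)
jitter_runs = perturb_test(data)
```

Summaries such as `share_ranked_last(drop_runs, "US")` then show how often a given country keeps its position across the simulated rankings.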

The sensitivity tests showed that the overall performance scores for each country varied but that the ranks clustered within several groups similar to those shown in Exhibit 1. Among the simulations, Australia, the Netherlands, and the United Kingdom were nearly always ranked among the three top countries; the U.S. was always ranked at the bottom, while Switzerland was also nearly always ranked toward the bottom. The other five countries varied in order between the fourth and eighth ranks. These results suggest that the selected ranking method was not highly sensitive to the choice of indicators.

Limitations

This report has limitations. Some are particular to our analysis, while some are inherent in any effort to assess overall health system performance. First, no international comparative report can encapsulate every aspect of a complex health care system. As described above, our sensitivity analyses suggest that comparative country rankings in the middle of the distribution (but not the extremes) are somewhat sensitive to small changes in the data or indicators included in the analysis, but these changes do not move these countries out of the middle group of the distribution.

Second, despite improvements in recent years, standardized cross-national data on health system performance are limited. The Commonwealth Fund surveys offer unique and detailed data on the experiences of patients and primary care physicians but do not capture important dimensions that might be obtained from medical records or administrative data. Furthermore, patients’ and physicians’ assessments might be affected by their expectations, which could differ by country and culture. Augmenting the survey data with standardized data from other international sources adds to our ability to evaluate population health and disease-specific outcomes, particularly regarding the impact of the COVID-19 pandemic. Some topics, such as hospital care and mental health care, are not well covered by currently available international data. Furthermore, it is very difficult to characterize performance of such institutions through surveys because no single individual has a full perspective on that performance, and surveying multiple respondents from representative samples of institutions is logistically challenging and extremely costly.

Third, we base our assessment of overall health system performance on five domains (access to care, care process, administrative efficiency, equity, and health outcomes), which we weight equally to calculate each country’s overall performance score. We recognize a limitation in the care process domain: we do not measure quality for acute care conditions, especially in hospitals. Work on this is underway, but its generalizability will always be limited because it is not feasible to study every conceivable diagnosis.

We also recognize that other elements of system performance, such as innovative potential or public health preparedness, are important. We continue to seek feasible standardized indicators to measure other domains. COVID results, included for the first time in this report, capture some aspects of public health preparedness and system resilience, but are also limited in many respects.

Fourth, in defining the five domains, we recognize that some measures could plausibly fit within several domains. The assignment of measures to domains was reviewed extensively internally and externally with an expert advisory panel. To inform action, country performance should be examined at the level of individual measures in addition to the domains we have constructed.